🥷 [S2] Challenge 21

Just KNIME It, Season 2 / Challenge 21 reference

Challenge question

Challenge 21: Help the Caddie (Part 1)

Level: Medium

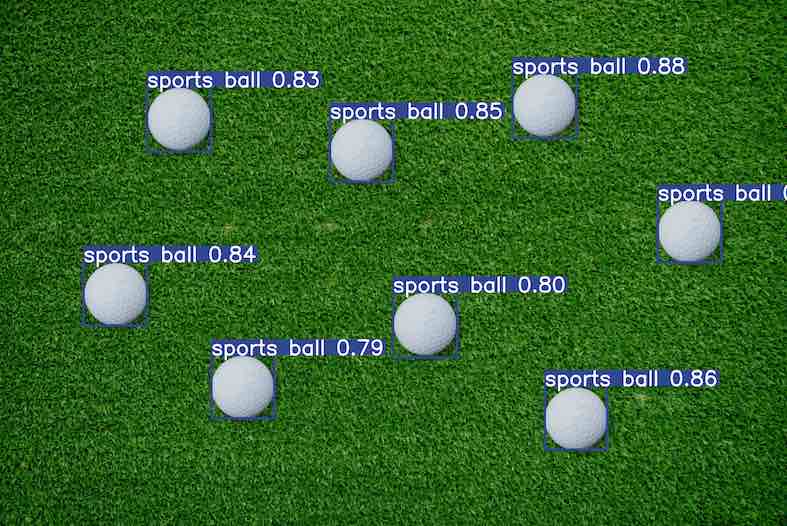

Description: Caddie Tom, who assists one of the most popular golf players, has asked for your help. He is tired of looking for golf balls in the field -- distinguishing between white balls and green grass can be tiresome! So he wants you to make a workflow that will help him identify golf balls on images he took from the field. Your task then is to segment the images containing golf balls. Hint: The KNIME Image Processing extension is very important for this challenge.

Author: Daria Liakh

Dataset: Golf Ball Data on the KNIME Hub

CV

Computer vision is a highly mature field. It has a long list of classic image processing techniques: morphological operations such as erosion and dilation, image compression, edge detection, SIFT, and more. Deep learning has pushed that maturity even further.

In the early days, detecting an object often meant finding its features, matching them against the image, and then filtering the matches with hand-set thresholds. In most cases this is no longer necessary. Even older deep learning models like ResNet and YOLO seem to have become less popular (although I'm not entirely sure, considering YOLO is already at version 8). The name of Meta's SAM, short for Segment Anything Model, also says a lot about how mature the field is.
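To make the "set thresholds and filter" idea concrete, here is a minimal sketch of a threshold-based segmentation for white balls on green grass. It is not part of the original workflow: the function name `segment_bright_regions`, both threshold values, and the synthetic test image are illustrative assumptions, and real photos would need tuning and some cleanup of the mask.

```python
import numpy as np

def segment_bright_regions(rgb, min_brightness=200, max_channel_spread=40):
    """Return a boolean mask of pixels that look white-ish.

    A pixel counts as "white" when every channel is bright and the
    channels are close to each other. Both thresholds are illustrative
    guesses, not tuned values.
    """
    rgb = np.asarray(rgb, dtype=np.int16)  # avoid uint8 wrap-around when subtracting
    darkest = rgb.min(axis=-1)
    brightest = rgb.max(axis=-1)
    return (darkest >= min_brightness) & (brightest - darkest <= max_channel_spread)

# Synthetic 6x6 "grass" image with a 2x2 white "ball" (made-up data)
image = np.zeros((6, 6, 3), dtype=np.uint8)
image[...] = (40, 140, 50)          # green background
image[2:4, 3:5] = (245, 245, 240)   # white patch

mask = segment_bright_regions(image)

# Bounding box of the segmented pixels as (xmin, ymin, xmax, ymax)
ys, xs = np.nonzero(mask)
bbox = (xs.min(), ys.min(), xs.max(), ys.max())
```

On a real photo this kind of rule breaks down quickly (highlights, shadows, clouds), which is exactly why the hand-tuned approach has fallen out of favor.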

Instead of using the KNIME Image Processing extension, we use YOLOv5 directly here. I won't describe the installation process. If the workflow doesn't run with the 'Conda Environment Propagation' node, refer to the official documentation for the latest instructions.

After installation, you only need to use a Python node:

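```python
import torch

# Model: fetch the small pretrained YOLOv5 model via torch.hub
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Image: the challenge dataset image on the KNIME Hub
im = 'https://api.hub.knime.com/repository/Users/alinebessa/Just%20KNIME%20It!%20Season%202%20-%20Datasets/Challenge%2021%20-%20Dataset/Golf%20balls.jpg:data?spaceVersion=-1'

# Inference
results = model(im)

# Save the image with the detections drawn on it
results.save()
```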

That's it! The annotated image is saved in the folder where the workflow is located (by default YOLOv5 puts it in a `runs/detect/exp` subfolder there).
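One caveat: this model returns bounding boxes for all the COCO classes it knows, not per-pixel masks, so a natural next step is to keep only the detections that could be golf balls. The sketch below assumes the balls are reported under the COCO label `sports ball`, which I have not verified on this image. The helper `keep_sports_balls`, the 0.25 confidence cut-off, and the sample rows are all made up for illustration; with a real model the rows would come from `results.pandas().xyxy[0].to_dict("records")`.

```python
def keep_sports_balls(detections, min_confidence=0.25):
    """Keep only rows labelled 'sports ball' at or above min_confidence.

    `detections` is a list of dicts with the columns YOLOv5 reports:
    xmin, ymin, xmax, ymax, confidence, class, name.
    """
    return [
        d for d in detections
        if d["name"] == "sports ball" and d["confidence"] >= min_confidence
    ]

# Hand-written sample rows (not real model output)
detections = [
    {"xmin": 10.0, "ymin": 20.0, "xmax": 30.0, "ymax": 40.0,
     "confidence": 0.81, "class": 32, "name": "sports ball"},
    {"xmin": 50.0, "ymin": 60.0, "xmax": 70.0, "ymax": 80.0,
     "confidence": 0.12, "class": 32, "name": "sports ball"},
    {"xmin": 0.0, "ymin": 0.0, "xmax": 90.0, "ymax": 200.0,
     "confidence": 0.90, "class": 0, "name": "person"},
]

balls = keep_sports_balls(detections)
boxes = [(d["xmin"], d["ymin"], d["xmax"], d["ymax"]) for d in balls]
```

The resulting `boxes` only approximate a segmentation. Getting real masks would need a segmentation model or a second step inside each box.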

It may be a bit hard to believe, but welcome to the real world.

Any thoughts?

- Maybe the SAM model could be a bit larger and more complex? Haven't tried it yet.

- Can you guess where the downloaded YOLO model is placed?

- How can we directly display the generated images in KNIME?
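A hint for the second question: `torch.hub.load` keeps the downloaded repository in a cache folder whose default location follows a documented rule based on the `TORCH_HOME` and `XDG_CACHE_HOME` environment variables. The sketch below re-implements that rule with the standard library only, so it runs without torch. The function name `default_torch_hub_dir` is my own, and it ignores any override made with `torch.hub.set_dir`. With torch installed, `torch.hub.get_dir()` gives the actual answer. This covers only the hub cache, not where the `.pt` weights file lands.

```python
import os

def default_torch_hub_dir(env=None):
    """Mirror the documented default: $TORCH_HOME/hub, where TORCH_HOME
    falls back to $XDG_CACHE_HOME/torch, and XDG_CACHE_HOME to ~/.cache."""
    env = os.environ if env is None else env
    torch_home = env.get("TORCH_HOME")
    if not torch_home:
        cache_home = env.get("XDG_CACHE_HOME") or os.path.join(
            os.path.expanduser("~"), ".cache"
        )
        torch_home = os.path.join(cache_home, "torch")
    return os.path.join(torch_home, "hub")

# Where the cache would live with the current environment variables
hub_dir = default_torch_hub_dir()
```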